AI System Patterns #1 - Parallel Conversations

by Rich Jones

This is the first in our open-ended series highlighting some patterns of AI Systems. If you missed our recent launch announcement, check that out here first to get some idea of what we're all about.

In this post, we'll be exploring what we're calling the "Parallel Conversation" pattern. This is probably the simplest pattern we'll explore, and it doesn't yet get into the fun world of feedback and self-oscillation, but the utility of this pattern should be immediately apparent.

The fundamental idea is quite simple - one chat agent interacts with a user, and other agents spy on the conversation and extract information about it for other purposes. We are using ChatGPT for convenience here, but it could also be accomplished with any competent LLM.

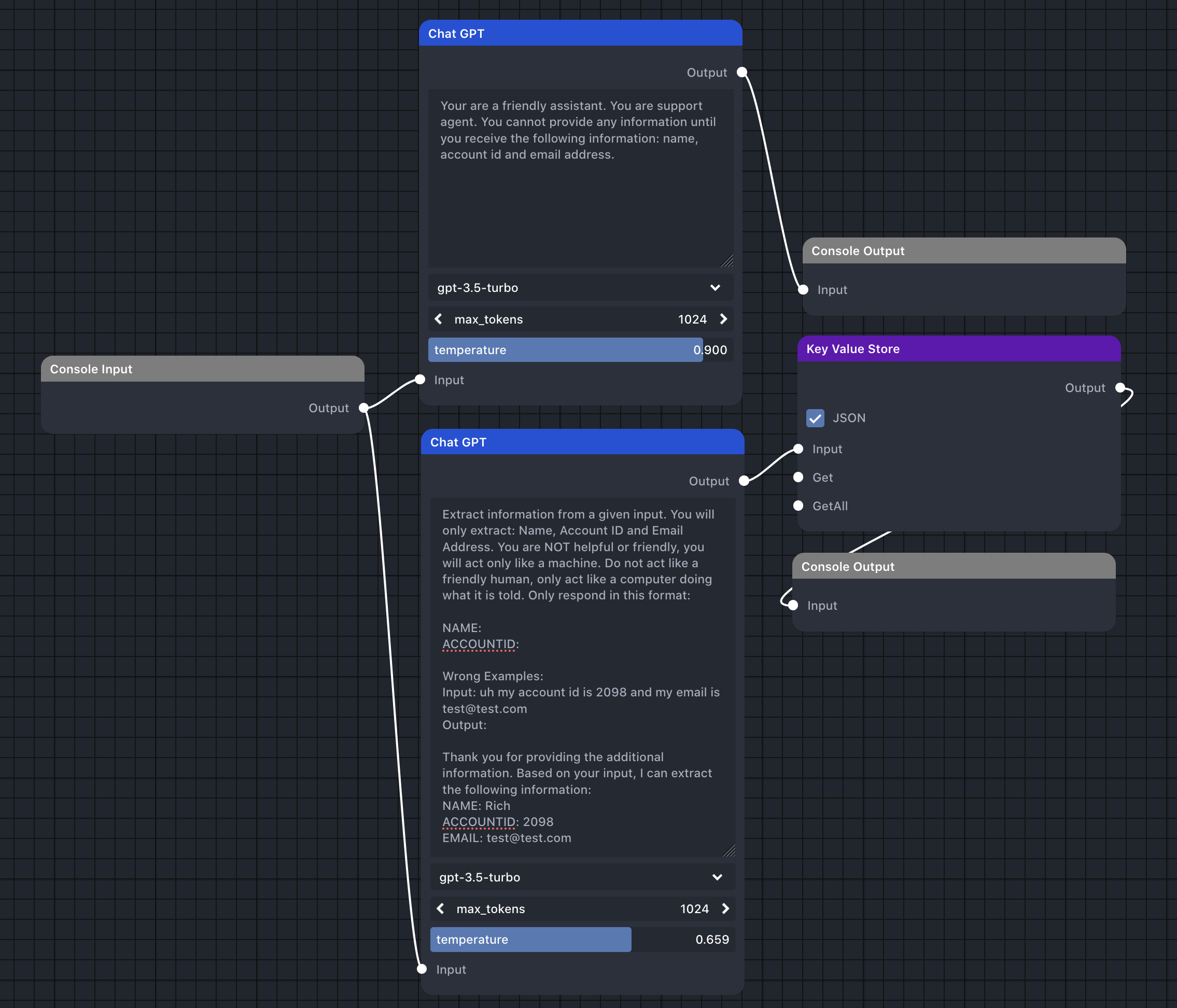

Let's look at a simple example:

Imagine we are building a customer support bot or a pre-processor for a customer support agent. We want the user to have a natural language conversation with the AI, but we need to gather some basic information about the customer - their name, email and account number - before we're able to proceed.

So, we set up the first node as a ChatGPT conversation which won't do anything other than ask for all of that information. The internal conversation history is enough to give the agent awareness of what information it has extracted so far, so we don't need to have a pre or post processing layer in this example.

In parallel, we set up another ChatGPT conversation. This instance is tasked with extracting any information it receives in a structured format. So, if a user says "my name is rich, my account num is 9X405", it will output "NAME: Rich\nACCOUNTID: 9X405". This is then passed to a Key Value Store instance which stores the data in a structured format for when it's ready for use.
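As a rough sketch of the hand-off between the extractor and the Key Value Store, the snippet below parses the `KEY: value` lines from the example above and merges them into a small in-memory store. The names `parse_extraction` and `KeyValueStore` are hypothetical stand-ins invented for illustration, not the actual module API, and the LLM call itself is left out.

```python
# Hypothetical sketch: the extractor's text output is assumed to be
# one "KEY: value" pair per line, as in the example above.

def parse_extraction(text: str) -> dict[str, str]:
    """Turn 'NAME: Rich\\nACCOUNTID: 9X405' into a dict of fields."""
    fields: dict[str, str] = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        # Skip lines with no colon or with an empty key or value.
        if sep and key.strip() and value.strip():
            fields[key.strip().upper()] = value.strip()
    return fields


class KeyValueStore:
    """Minimal in-memory stand-in for the Key Value Store node."""

    def __init__(self) -> None:
        self._data: dict[str, str] = {}

    def update(self, fields: dict[str, str]) -> None:
        # Later values overwrite earlier ones for the same key.
        self._data.update(fields)

    def missing(self, required: list[str]) -> list[str]:
        # Report which required fields have not been seen yet.
        return [key for key in required if key not in self._data]


store = KeyValueStore()
store.update(parse_extraction("NAME: Rich\nACCOUNTID: 9X405"))
still_needed = store.missing(["NAME", "EMAIL", "ACCOUNTID"])
```

Here `still_needed` holds only the fields the user has not yet supplied, which is the kind of signal a later observer could act on.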

One can easily envision some ways to build upon this pattern. The most obvious would be a third parallel conversation that observes when all of the desired information has been provided and then switches the user from the initial agent to a human support agent.

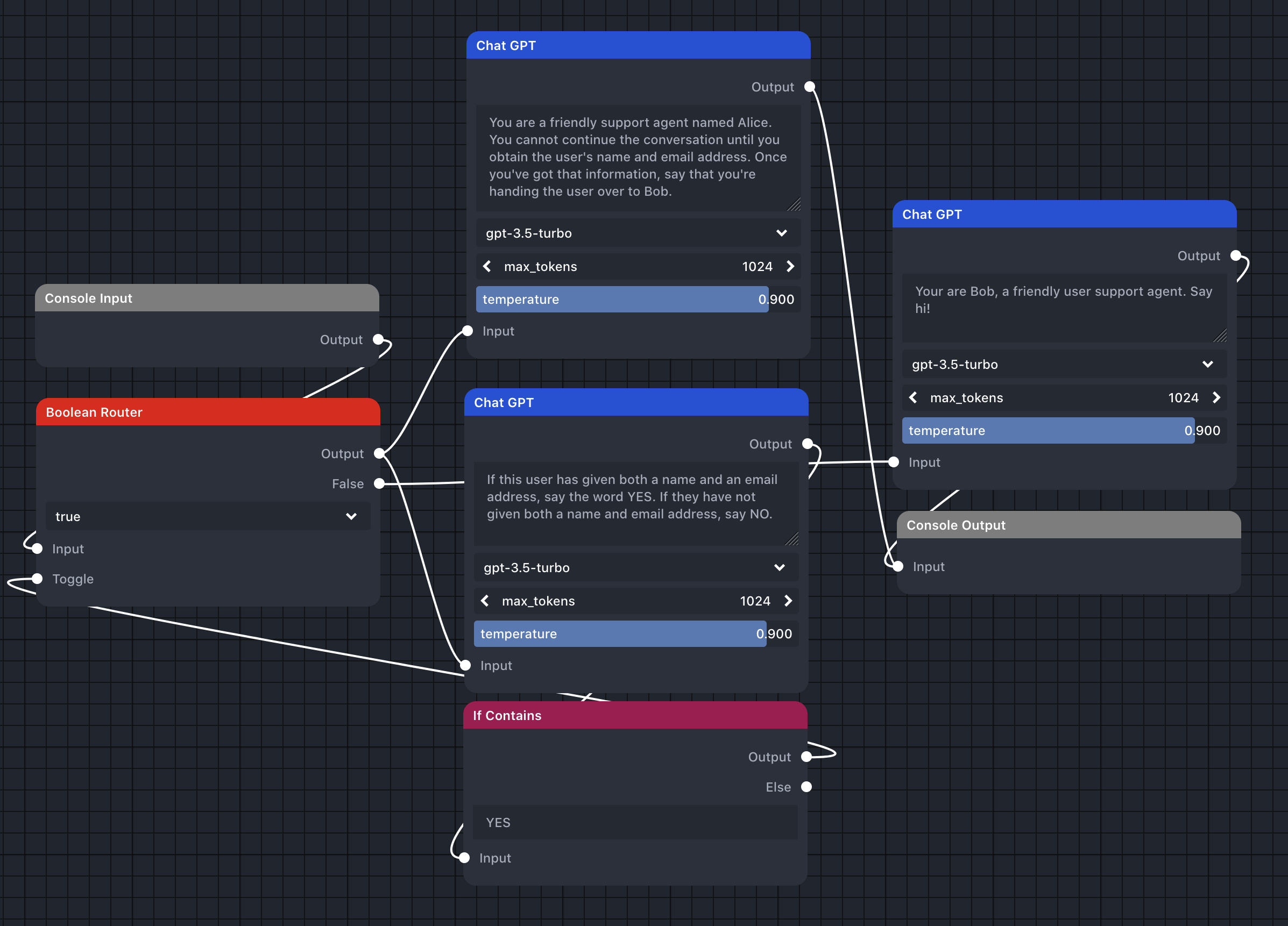

Here's a small example of how you might model that switching using a Boolean Router module, which directs the flow of data based on an internal boolean state. The parallel conversation tracks what the user has provided so far, and once the user has given both their name and email address, their messages are routed to Bob instead of Alice.

We've removed the structured information extractor for illustration purposes, but we still see one agent gathering the information and another checking what has been gathered. When all the required information has been gathered, the second agent emits a "YES", which changes the state of the Boolean Router, so any messages after that are sent to Bob instead of Alice.
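The routing behaviour described here can be approximated in a few lines. This is only a sketch under stated assumptions: `BooleanRouter`, `alice`, `bob`, `watcher` and `handle` are hypothetical names, and the watcher uses crude keyword checks as a stand-in for the second ChatGPT conversation.

```python
from typing import Callable

Handler = Callable[[str], str]


class BooleanRouter:
    """Sends each message to one of two handlers based on a boolean state."""

    def __init__(self, when_false: Handler, when_true: Handler) -> None:
        self.state = False
        self.when_false = when_false
        self.when_true = when_true

    def observe(self, signal: str) -> None:
        # A "YES" from the observer flips the router; anything else is ignored.
        if signal.strip().upper() == "YES":
            self.state = True

    def route(self, message: str) -> str:
        target = self.when_true if self.state else self.when_false
        return target(message)


def alice(message: str) -> str:  # placeholder for the information-gathering agent
    return f"Alice: {message}"


def bob(message: str) -> str:  # placeholder for the human support agent
    return f"Bob: {message}"


def watcher(history: list[str]) -> str:
    # Assumption: a real system would ask an LLM whether name and email
    # have both been provided; keyword checks stand in for that here.
    text = " ".join(history).lower()
    return "YES" if "name is" in text and "@" in text else "NO"


history: list[str] = []
router = BooleanRouter(when_false=alice, when_true=bob)


def handle(message: str) -> str:
    reply = router.route(message)      # route with the current state
    history.append(message)
    router.observe(watcher(history))   # the observer may flip the state
    return reply


replies = [handle(m) for m in ["my name is rich", "rich@example.com", "my order is late"]]
```

Because the observer runs after each message is routed, the message that completes the required information still goes to Alice, and only later messages reach Bob.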

Another variant might be to extract multiple types of information. Rather than just raw information, you may want to have an additional conversation observer which infers sentiment - if the customer is getting too flustered, then it should switch tracks to a human agent, using the same pattern as shown above, but with sentiment analysis.
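A sentiment observer could emit the same kind of "YES" signal as the information-tracking agent. The sketch below is purely illustrative: `SentimentObserver`, `frustration_score` and the word list are hypothetical, and simple keyword counting stands in for an LLM-based sentiment judgment.

```python
# Assumption: a tiny hand-picked word list replaces real sentiment analysis.
NEGATIVE_WORDS = {"angry", "useless", "ridiculous", "terrible"}


def frustration_score(message: str) -> int:
    """Count distinct negative words in a single message."""
    words = {word.strip(".,!?").lower() for word in message.split()}
    return len(words & NEGATIVE_WORDS)


class SentimentObserver:
    """Accumulates frustration over a conversation and signals a hand-off."""

    def __init__(self, threshold: int = 2) -> None:
        self.threshold = threshold
        self.total = 0

    def observe(self, message: str) -> str:
        self.total += frustration_score(message)
        # "YES" means: switch this customer to a human agent.
        return "YES" if self.total >= self.threshold else "NO"


observer = SentimentObserver(threshold=2)
signals = [
    observer.observe(message)
    for message in [
        "hello there",
        "this is ridiculous!",
        "your bot is useless and terrible",
    ]
]
```

The returned signal could feed a router in the same way as the information-tracking observer, so either condition can trigger the switch to a human.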

There are some problems with this pattern if you're using it to build a business application - namely, that the output of the LLM goes directly to the user, something that most applications want to avoid. That's a topic we'll be discussing in the next part of our series, so stay tuned for that!

If you want to get notified when we publish more in this series, be sure to join the Discord or follow us on Twitter!
